| sureanem |

Posted on 19-07-03, 13:03 in Blackouts

Stirrer of Shit

Post: #461 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

Posted by Kawa This. On a more practical note: for your browsing, have you tried blocking images, or even browsing with elinks over SSH? It should save a lot of data while keeping sites relatively intact. There was a certain photograph about which you had a hallucination. You believed that you had actually held it in your hands. It was a photograph something like this.

| sureanem |

Posted on 19-07-03, 21:15 in Something about cheese! (revision 1)

Post: #462 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

Posted by wertigon Okay, so they assume they're dealing with a uniform distribution (e.g. of probabilities) and then use this math to get a value between 0 and 1, which they then plug into the inverse cdf. But why can't they use the inverse cdf directly? They're calculating an expected value for each rank, not using the pdf of the kth order statistic for anything interesting. Shouldn't the expected value for a rank be closely approximated by cdf⁻¹((rank + 1/2)/total), with rank counted from 0 at the bottom? Intuitively, the top player among ten players is better than 90-100% of them, the second best is better than 80-90%, ..., the tenth best is better than 0-10%. You'd need the order statistics to figure out which values within each band are more likely, but for large n (say, n > 100) the difference is very small. Considering Elo scores only have four significant digits, possibly fewer, this approximation shouldn't affect the accuracy much, and it makes the model far easier to reason about. It holds up in testing, so why isn't it correct? SymPy could help with the bigger calculations, but more specifically try out this: I tried it with rank 3000, and it claimed the answer was 3.39959172783327 × 10^143981. How exactly am I supposed to input it? Tried this:

((1500 + 1.25*350) + 0.3*350*exp(0.5*(log(2)+log(pi)+log(60000))+60000*log(60000)-1 - 0.5*(log(2)+log(pi)+log((60000-3000))+(60000-3000)*log((60000-3000))-1 + 3000*log(60000)))*(log(60000)-(ceiling(log(3000-1)))))-((1500 + 1.25*350) + 0.3*350*exp(0.5*(log(2)+log(pi)+log(6000))+6000*log(6000)-1 - 0.5*(log(2)+log(pi)+log((6000-3000))+(6000-3000)*log((6000-3000))-1 + 3000*log(6000)))*(log(6000)-(ceiling(log(3000-1)))))

EDIT: oops, forgot a / in a closing tag
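
A minimal sketch of the comparison being argued about, assuming (as the study does) normally distributed ratings and working in standard-deviation units. `expected_order_stat` numerically integrates the exact expected value of the i-th of n order statistics; `midpoint_approx` is the cdf⁻¹((rank + 1/2)/total) shortcut rewritten with a 1-based rank i as (i - 1/2)/n; `blom_approx` is Blom's (i - 3/8)/(n + 1/4) plotting position, a standard refinement added here only for comparison. The function names, integration range and step count are illustrative choices, not anything taken from the study.

```python
import math
from statistics import NormalDist

SQRT2 = math.sqrt(2.0)
LOG_SQRT_2PI = 0.5 * math.log(2.0 * math.pi)
STD_NORMAL = NormalDist()  # mean 0, sd 1; rescale by sd and shift by mean for Elo points


def _log_cdf(x):
    # erfc keeps Phi(x) accurate far into the lower tail, so log() never sees 0
    return math.log(0.5 * math.erfc(-x / SQRT2))


def expected_order_stat(i, n, lo=-8.0, hi=8.0, steps=16000):
    """E[X_(i:n)] for n iid standard normals, i = 1 (lowest) .. n (highest).

    Trapezoidal integration of x times the order-statistic density
        n! / ((i-1)! (n-i)!) * F(x)^(i-1) * (1-F(x))^(n-i) * f(x),
    evaluated in log space so large n does not overflow.
    """
    log_coef = math.lgamma(n + 1) - math.lgamma(i) - math.lgamma(n - i + 1)
    h = (hi - lo) / steps
    total = 0.0
    for k in range(steps + 1):
        x = lo + k * h
        log_dens = (log_coef
                    + (i - 1) * _log_cdf(x)
                    + (n - i) * _log_cdf(-x)       # 1 - F(x) = F(-x) by symmetry
                    - 0.5 * x * x - LOG_SQRT_2PI)  # log f(x)
        weight = 0.5 if k in (0, steps) else 1.0
        total += weight * x * math.exp(log_dens)
    return total * h


def midpoint_approx(i, n):
    """The shortcut from the post: inverse cdf at (i - 1/2) / n."""
    return STD_NORMAL.inv_cdf((i - 0.5) / n)


def blom_approx(i, n):
    """Blom's plotting position, a common refinement of the midpoint rule."""
    return STD_NORMAL.inv_cdf((i - 0.375) / (n + 0.25))


if __name__ == "__main__":
    n = 10
    for i in (1, 5, 10):
        print(i, round(expected_order_stat(i, n), 4),
              round(midpoint_approx(i, n), 4), round(blom_approx(i, n), 4))
```

For n = 10 the top rank comes out at about 1.539 sd, while the midpoint rule gives about 1.645 sd, so the shortcut is worst at the extremes and close near the median; the gap narrows as n grows (roughly 0.07 sd for the top of 100 players).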

| sureanem |

Posted on 19-07-03, 21:46 in Something about cheese! (revision 1)

Post: #463 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

This would work for any data set though, wouldn't it? I don't see how it's overfitting. They assume it's a normal distribution already, so this would be no change from how things were before. EDIT: And of course, the two models should still give the same predictions around the median regardless of any inaccuracy, which is my main gripe with it.

| sureanem |

Posted on 19-07-04, 13:26 in I have yet to have never seen it all.

Post: #464 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

So, uh, how do these two files differ?

| sureanem |

Posted on 19-07-04, 21:32 in Something about cheese! (revision 2)

Post: #465 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

Posted by wertigon Because n! gets changed to exp(ln(n!)), where ln(n!) is a far longer expression, and H(x) gets changed to round(γ + ln(x)). Incidentally, H(n) can be written as follows: Yeah, that'd work too. With this it should be possible to reach good enough accuracy with a symbolic calculator (e.g. a calculator that understands the notion of 100! / 101!), at least for the purpose of determining the difference in Elo.

OK, so that worked. Because I'm lazy, I hardcoded the constants. You've got about 60k men and 6k women. The average man should be ranked 30k, the average woman 3k.

Average man: = 1937.5
Average woman: = 1937.5

So, the same averages. This is not what the dataset indicates, as per the graph: the average woman is far worse than the average man, by about one standard deviation. Do you see my gripe with the model and the broader study now?

EDIT: Oops, wrong formula
EDIT2: Huh, how can the average be 1937 when I put it down as 1500? See, this is why psychologists shouldn't play around with statistics.
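
The two substitutions discussed here can be checked with the Python standard library alone. This is a sketch, not the study's code: `math.lgamma(n + 1)` gives ln(n!) directly, Stirling's formula is written out with its trailing -n term, and the harmonic number is compared against the usual asymptotic form γ + ln n + 1/(2n). The helper names are hypothetical.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant


def ln_factorial_stirling(n):
    """Stirling's approximation: ln(n!) ~ 0.5*ln(2*pi*n) + n*ln(n) - n."""
    return 0.5 * math.log(2.0 * math.pi * n) + n * math.log(n) - n


def ln_factorial(n):
    """ln(n!) via the log-gamma function, since Gamma(n + 1) = n!."""
    return math.lgamma(n + 1)


def factorial_ratio(a, b):
    """a! / b! without forming either factorial, e.g. 100!/101! = 1/101."""
    return math.exp(ln_factorial(a) - ln_factorial(b))


def harmonic(n):
    """H(n) = 1 + 1/2 + ... + 1/n, summed with math.fsum to limit rounding error."""
    return math.fsum(1.0 / k for k in range(1, n + 1))


def harmonic_approx(n):
    """Asymptotic form H(n) ~ gamma + ln(n) + 1/(2n)."""
    return EULER_GAMMA + math.log(n) + 1.0 / (2 * n)


if __name__ == "__main__":
    for n in (10, 6000, 60000):
        # ln(n!) and the error of Stirling's formula (shrinks like 1/(12n))
        print(n, ln_factorial(n), ln_factorial(n) - ln_factorial_stirling(n))
    print(factorial_ratio(100, 101), 1 / 101)
    print(harmonic(60000), harmonic_approx(60000))
```

Working in log space is what makes 60000! tractable: its logarithm is an ordinary float even though the factorial itself overflows any float type.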

| sureanem |

Posted on 19-07-04, 21:54 in I have yet to have never seen it all.

Post: #466 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

Man, I'm disappointed. What's the deal with the images, Galicians are short?

| sureanem |

Posted on 19-07-05, 08:49 in Something about cheese!

Post: #467 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

Posted by funkyass Yeah, sure. Players gain or lose rating points based on how unlikely the result was. So if someone with a low score plays against someone with a high score and wins 50/50, their ratings will converge rapidly, whereas if the player with the high score wins, there's pretty much no change. In practice, you can just assume that ratings have already converged for all players who aren't complete rookies. Posted by wertigon I wouldn't think there's anything wrong with the calculations. The replication study also said it gave very high results, like estimating the top German player above the current world champion. Plugging in k = 7, it says that the gap according to the model would be 228-298 points, depending on your values for n. This is about what the study finds too. If you're saying the model is horribly broken and does not accurately reflect reality, then that makes sense. I've yet to understand what was wrong with the far simpler model that can be calculated on a better pocket calculator.
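
For reference, the update being described is the standard Elo rule: expected score E_A = 1 / (1 + 10^((R_B - R_A)/400)) and new rating R_A' = R_A + K(S_A - E_A). A minimal sketch follows; the K-factor of 32 is an assumption for illustration, since rating bodies use various values.

```python
def expected_score(r_a, r_b):
    """Expected score of A against B under the common logistic Elo curve."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def update(r_a, r_b, score_a, k=32):
    """New ratings for A and B after one game.

    score_a is 1 for a win by A, 0.5 for a draw, 0 for a loss.
    k=32 is an assumed K-factor, not a value taken from the thread.
    """
    delta = k * (score_a - expected_score(r_a, r_b))
    return r_a + delta, r_b - delta  # zero-sum: B loses what A gains


if __name__ == "__main__":
    print(update(1500, 1500, 1))  # (1516.0, 1484.0): evenly matched, winner gains k/2
    print(update(1900, 1500, 1))  # favourite wins: gains only k/11, about 2.9
    print(update(1500, 1900, 1))  # underdog wins: gains 10k/11, about 29.1
```

The second call is the "pretty much no change" case: a 400-point favourite who wins moves by under 3 points, while an upset moves both players ten times as far.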

| sureanem |

Posted on 19-07-05, 22:39 in Something about cheese!

Post: #468 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

The study doesn't do that either, though. c1 and c2 are poorly explained magic constants, and m and s are stated as taken from the whole population. If I changed the model to have different means for men and women, it would just be a convoluted way to restate my original statement (women are worse at chess than men): it would be patently absurd to claim that the difference in skill is accounted for by... a difference in skill, and that this proves men are not more skilled than women. Just to be clear: the claim the study makes is that differences in the means of the two populations do not explain the skill gap; rather, the skill gap is solely (or 96%, anyway) explained by the fact that far more men play chess than women, which means you get more extreme scorers and so on and so forth, but this only holds at the tails.

| sureanem |

Posted on 19-07-07, 12:07 in (Mis)adventures on Debian ((old)stable|testing|aghmyballs)

Post: #469 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

A step in the right direction. But couldn't they have done it the other way around? Binaries in /bin, root binaries also in /bin (chmod 754), libraries …; evacuate /usr entirely save for symlinks, and then in 10 years or so maybe they can delete it.
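
What mode 754 means for the proposed root-only binaries in /bin, decoded with Python's `stat` module: the owner (root) gets rwx, the group gets r-x, and everyone else gets r-- (can read, cannot execute). The `may_execute` helper is hypothetical and checks only the execute bits, ignoring ownership and superuser rules.

```python
import stat


def describe_mode(bits):
    """Render permission bits the way `ls -l` shows them for a regular file."""
    return stat.filemode(stat.S_IFREG | bits)


def may_execute(bits, who):
    """Hypothetical helper: does the execute bit let `who` ('owner', 'group', 'other') run it?"""
    mask = {"owner": stat.S_IXUSR, "group": stat.S_IXGRP, "other": stat.S_IXOTH}[who]
    return bool(bits & mask)


if __name__ == "__main__":
    print(describe_mode(0o754))  # -rwxr-xr--
    print([who for who in ("owner", "group", "other") if may_execute(0o754, who)])  # ['owner', 'group']
```

Since others keep read access, an ordinary user could usually copy such a binary and run the copy, so the mode mostly keeps the tool out of casual reach rather than securing it.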

| sureanem |

Posted on 19-07-07, 15:45 in (Mis)adventures on Debian ((old)stable|testing|aghmyballs)

Post: #470 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

I thought something like stali'd be cool: Filesystem

If we're discussing cool filesystem hierarchies, why not GoboLinux? Imagine that with static linking and each program in its own jail. Would be super cool. (Of course, they should drop the Windows-esque capitalization, but you can't have it all.)

While we're at it, /var/log should be mounted as tmpfs, there should be an option like noatime for ctime and mtime, and there should be an option to clamp timestamps (e.g. anything older than X days gets rounded to Jan 1, 1970) or to use a monotonic but otherwise random clock. Furthermore, applications shouldn't get to write files willy-nilly without my consent. In particular, none of the damn log files that I did not request or know about. GTK (?) is the worst offender, requiring this ugly hack to respect my privacy:

Maybe each package could have "permissions," like on Android. So something like GIMP wouldn't ever have the "state" permission, and all files (except for those created through the save file dialog) would be written to a temporary overlay filesystem that goes away when you close GIMP. /pipedream

Could something like "take a snapshot of the OS installed files" realistically be accomplished? For instance, Debian's torbrowser-launcher puts its binaries in ~/.local/share/torbrowser/tbb/x86_64/tor-browser_en-US/Browser/, while Firefox lives in /usr/lib/firefox-esr/. Wouldn't everything break if you removed /etc, for instance?

It's probably far more practical to force people into upgrading gradually toward the local maximum than to have them take a giant leap and hope they don't resist. If competently implemented, the end goal is the same. I mean, compare the success of the early-2000s DRM schemes (CPPM and friends) to the success of the current ones. Boiling frog and all. In ten years piracy won't even be possible.
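
The timestamp-clamping idea can be approximated in userspace, as a rough sketch only: the hypothetical `clamp_old_timestamps` below walks a directory and resets the atime and mtime of old files to the Unix epoch with `os.utime`. It cannot do the same for ctime, which userspace cannot set; the `os.utime` call itself bumps ctime to the current time, so a real version would need filesystem or kernel support, much like noatime.

```python
import os
import stat
import tempfile
import time

SECONDS_PER_DAY = 86400


def clamp_old_timestamps(root, max_age_days):
    """Hypothetical userspace stand-in for a 'clamp timestamps' mount option.

    Every regular file under `root` whose mtime is older than `max_age_days`
    gets its atime and mtime set to the Unix epoch (1970-01-01 00:00 UTC).
    Returns how many files were clamped. Symlinks are skipped, not followed.
    """
    cutoff = time.time() - max_age_days * SECONDS_PER_DAY
    clamped = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            st = os.lstat(path)
            if stat.S_ISREG(st.st_mode) and st.st_mtime < cutoff:
                os.utime(path, (0, 0))  # (atime, mtime); ctime becomes "now" as a side effect
                clamped += 1
    return clamped


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        old, new = os.path.join(d, "old.log"), os.path.join(d, "new.log")
        for p in (old, new):
            open(p, "w").close()
        ten_days_ago = time.time() - 10 * SECONDS_PER_DAY
        os.utime(old, (ten_days_ago, ten_days_ago))
        print(clamp_old_timestamps(d, max_age_days=7))  # 1
        print(time.gmtime(os.stat(old).st_mtime)[:3])  # (1970, 1, 1)
```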

| sureanem |

Posted on 19-07-07, 18:34 in Monocultures in Linux and browsers (formerly "Windows 10")

Post: #471 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

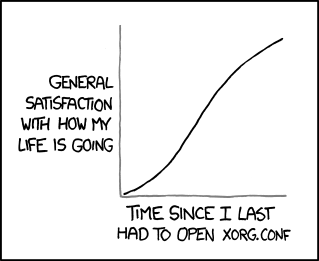

The death watch for the X Window System (aka X11) has probably started. Probably the first time I am excited about the death of a legacy technology. With that and AppImages, my two biggest gripes with Linux will be gone. With Mozilla's upcoming Tor support, I can actually feel kind of optimistic about the future of technology if I don't look too hard.

| sureanem |

Posted on 19-07-07, 23:25 in (Mis)adventures on Debian ((old)stable|testing|aghmyballs)

Post: #472 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

Posted by CaptainJistuce Windows does actually have a sensible approach to software. Each program gets its own directory and contains whatever DLL files it needs. Sure, there are some kludges (AppData, registry), but those are present on Linux too. In an ideal world, each file created by a program would be "tagged," so that removing the program would guarantee the removal of all the files it created (except those the user took ownership of, obviously), but since we don't happen to live there, we have to put up with installers/package managers, both of which are flaky and unpleasant.

Ultimately, I think we should just throw out the whole package manager thing. Each package gets distributed as an AppImage, but stripped of its dependencies, which are replaced with their hashes. Then, upon execution, they get lazy-loaded, either from cache or from the Internet, and added to the AppImage. Then the filesystem handles deduplication. Maybe the last step could be skipped by just not adding them to the AppImage. This means:
1) packages are as small as before
2) packages have no dependencies, just latency
3) cached packages can be deleted at random and have no integrity requirements, being just SHA256-identified blobs (although doing this without an Internet connection might break stuff)
4) there is no need for a central server, as BitTorrent can be used (although you would want one, since it'd be a shame not to have enough seeders for Firefox)
5) since packages are aware of all their dependencies, sandboxing becomes trivial
6) packages can declare upfront what privileges to request from the sandbox, like mobile apps

You might say that this is just reinventing package managers, but consider that the complexity is far smaller. For starters, there are no dependency trees. Perhaps more importantly, unused applications' dependencies can be trivially pruned without having to reason about whether they might be used at some point in the future. Or conversely, the size of the dependency cache can be held constant, although this produces non-deterministic behavior without Internet access. I think this could be possible if you went with a hybrid system: XFCE and such get installed with the usual package manager, but applications without such deep tendrils (think Firefox, and most other software on your system) get the AppImage treatment.
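
A minimal sketch of the lazily loaded, hash-addressed dependency store described above, under heavy assumptions: the `BlobCache` class and its `fetch` callback are hypothetical, and `fetch` stands in for whatever transport (repo mirror, BitTorrent) would actually deliver a blob. It illustrates points 2 and 3 of the list: a dependency is just a SHA-256 digest, blobs are verified on every load, and any cached blob can be evicted at random because it can always be fetched again.

```python
import hashlib
import os
import random
import tempfile


class BlobCache:
    """Hypothetical content-addressed store: blobs live in `cache_dir` under
    their SHA-256 hex digest. `fetch(digest) -> bytes` is an assumed stand-in
    for the real download mechanism, not an existing API."""

    def __init__(self, cache_dir, fetch):
        self.cache_dir = cache_dir
        self.fetch = fetch
        os.makedirs(cache_dir, exist_ok=True)

    def _path(self, digest):
        return os.path.join(self.cache_dir, digest)

    def get(self, digest):
        """Return the blob for `digest`, loading it lazily on first use."""
        path = self._path(digest)
        try:
            with open(path, "rb") as f:
                data = f.read()
            if hashlib.sha256(data).hexdigest() == digest:
                return data              # cache hit
            os.remove(path)              # corrupt entry: drop it and refetch
        except FileNotFoundError:
            pass                         # cache miss
        data = self.fetch(digest)
        if hashlib.sha256(data).hexdigest() != digest:
            raise ValueError(f"fetched blob does not match {digest}")
        tmp = path + ".part"
        with open(tmp, "wb") as f:
            f.write(data)
        os.replace(tmp, path)            # atomic rename: no half-written blob under its real name
        return data

    def evict_random(self, count, rng=random):
        """Delete up to `count` cached blobs at random; any of them can be refetched."""
        names = [n for n in os.listdir(self.cache_dir) if not n.endswith(".part")]
        for name in rng.sample(names, min(count, len(names))):
            os.remove(self._path(name))


if __name__ == "__main__":
    lib = b"pretend this is libfoo.so"
    remote = {hashlib.sha256(lib).hexdigest(): lib}  # stand-in for a mirror
    with tempfile.TemporaryDirectory() as d:
        cache = BlobCache(d, fetch=remote.__getitem__)
        digest = next(iter(remote))
        assert cache.get(digest) == lib   # fetched, verified, cached
        cache.evict_random(10)            # disk pressure: throw it away
        assert cache.get(digest) == lib   # transparently fetched again
```

Because digests are re-checked on read, a damaged cache entry is repaired by refetching instead of by any integrity bookkeeping, which is what lets the cache be pruned arbitrarily.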

| sureanem |

Posted on 19-07-07, 23:37 in Monocultures in Linux and browsers (formerly "Windows 10")

Post: #473 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

I might be one of them UNIXoids, and I sure don't like X. That thing is the opposite of minimal. The only reason it got ahead in the first place was first-mover advantage and 1990s craziness. Then again, Windows is a better implementation of the real Unix philosophy (Worse is better), and its GUI is pretty much perfect (or was, anyway, except for the Aero debacle and the Metro debacle and the Windows 10 start menu debacle), so I might be biased here. As for UXtardation, there's a simple heuristic you can use. Go to the website. Does it look like it was designed by a sane person? Then the project is probably sane too. Wayland is probably the first technology in a long time that I'm bullish on (other than cryptocurrencies, and AppImages as I said above).

| sureanem |

Posted on 19-07-08, 00:32 in (Mis)adventures on Debian ((old)stable|testing|aghmyballs)

Post: #474 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

Well, sure, there are some kludges, but in essence that's how it works, isn't it? Each program has some .dll files shipped with it and a folder in the Program Files/ hierarchy. I can't remember Firefox or anything else ever hassling me about dependencies on Windows, except for .NET and friends, but those are practically part of the system anyway.

| sureanem |

Posted on 19-07-08, 02:04 in (Mis)adventures on Debian ((old)stable|testing|aghmyballs)

Post: #475 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

Hardly anyone except people porting Linux software uses MinGW/Cygwin, do they? When in Rome, do as the Romans do... I can't remember ever having had to reason about the dependencies of an application, or having an application require 200 MB of mystery-meat libraries with scary-sounding names to run.

| sureanem |

Posted on 19-07-08, 12:15 in (Mis)adventures on Debian ((old)stable|testing|aghmyballs)

Post: #476 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

Posted by BearOso Is that really a real-world concern? Windows isn't free either, but it's not common to pay for it (save for OEM).

Posted by CaptainJistuce I don't see it as a kludge, it's a beautifully clean solution. No dependencies, no problem. That said, you don't SEE the dynamically-linked libraries the program DOESN'T carry in its install directory, which is decidedly non-zero. That's true. But they can't be that big, can they? So... common libraries don't count as libraries? Sufficiently common ones don't. If an application depends on glibc, well, that's like saying it "depends" on you having a CPU inside the computer, isn't it? glibc is a part of the OS, just like .NET. Why they don't ship .NET with the OS, I don't know; on a long enough timeline, all Windows installs end up with .NET installed anyway.

Posted by Nicholas Steel And the installer will get most of the libraries it needs installed silently. It is only the collections with separate installers (DirectX, Dot Net) that you ever see. And, well, that's most of the responsibility of an installer. They aren't just a self-extracting ZIP file. (Similarly, that's why you've long been encouraged to use the uninstaller instead of just deleting the directory: it lets the system know the program is no longer using those DLLs and gives it the option of deleting them if no one uses them.) Touché. Still, you would notice if the installer was several hundred megs. It's very rare for installers to download things over the internet, and you can notice it's rare for installers to be that big. Certainly, the more robust Lunix package management system lends itself to elaborately tangled webs of dependencies, whereby installing a Super Nintendo emulator will bring in printer support as a dependency (because of some of the fonts used, if I recall), or byuu's old go-to example of a graphical front-end for command-line CD burner software changing his init system. Well, they could make a better trade. I agree it's a trade-off, though.

Posted by Screwtape Well, you just refer to it through the package repo's name for it or by hash, problem solved. My suggestion is very similar, except that it wouldn't include the libraries, just their hashes, and then the OS downloads them from the repos. If it's running low on disk space, it can delete big libraries at random without much happening.

Posted by creaothceann When do you want to delete glibc though? The program itself would still be distributed like normal.

| sureanem |

Posted on 19-07-08, 12:30 in Something about cheese!

Post: #477 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

Posted by wertigon No, only for the upper echelons. The paper doesn't put forth that hypothesis at all. Its claim is that, if you have 1000 men and 100 women, the top 10 men will have a higher average score than the top 10 women, because the top 10 men represent the 99th percentile but the top 10 women only the 90th. Suppose we have a total population size (Z) of 1000 individuals. In this pool, we have 900 people from one group (X) and 100 people from a different group (Y). How does that work, then? You become less skilled by being in a smaller group? That makes no sense. If you give a country with 10 million people some test, and each region has 1 million people, then by that logic every region should have a lower average score than the country as a whole by virtue of having a smaller population. This is not mathematically possible, since the country's average is just the population-weighted average of its regions' averages. Another example: in the PISA rankings, Singapore and Finland both score highly despite being rather small countries. If no difference exists, X and Y should have the same mean. But since they do, X and Y should have different means, especially compared to Z. This is why I think you made a mistake in your model calculations. You can't just do that, though. The study uses the same mean for them. If they didn't, the model wouldn't be consistent with their claims; see above. Either way, a correlation has been proven. Perhaps an ML method could shed further light on this. But, yes. I think we have reached the end for now. How would ML help? It'd just be a roundabout way of doing regression.
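
The claim attributed to the paper can be checked with a quick Monte Carlo sketch, assuming both groups draw skill from the same standard normal distribution; the group sizes 1000 and 100, the top 10, the 500 trials and the seed are arbitrary illustrative choices. The overall group means come out the same, while the top-10 mean of the larger group comes out clearly higher: the gap lives only in the tail.

```python
import heapq
import random
import statistics


def top_k_mean(sample, k):
    """Mean of the k largest values in `sample`."""
    return statistics.fmean(heapq.nlargest(k, sample))


def simulate(n_big=1000, n_small=100, k=10, trials=500, seed=1):
    """Both groups are drawn from the SAME N(0, 1); only their sizes differ.

    Returns trial-averaged group means and top-k means, in sd units."""
    rng = random.Random(seed)
    totals = {"mean_big": 0.0, "mean_small": 0.0, "top_big": 0.0, "top_small": 0.0}
    for _ in range(trials):
        big = [rng.gauss(0.0, 1.0) for _ in range(n_big)]
        small = [rng.gauss(0.0, 1.0) for _ in range(n_small)]
        totals["mean_big"] += statistics.fmean(big)
        totals["mean_small"] += statistics.fmean(small)
        totals["top_big"] += top_k_mean(big, k)
        totals["top_small"] += top_k_mean(small, k)
    return {name: total / trials for name, total in totals.items()}


if __name__ == "__main__":
    for name, value in simulate().items():
        print(f"{name:>10}: {value:+.3f}")
```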

| sureanem |

Posted on 19-07-08, 13:50 in Board feature requests/suggestions

Post: #478 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

So say you'd want to discuss yourself some politics. Should you make a new thread or post it in the Something about chess! thread (formerly Politics!)?

| sureanem |

Posted on 19-07-08, 16:56 in Something about cheese!

Post: #479 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

Primaries are coming up. The Republican ones won't be very interesting to follow, but what about the Democrats'? Specifically, will they go for Harris or Sanders? Obviously, Biden would be electable like nothing else. He'd win the general against Trump with near absolute certainty. But the damage done to the party would be far too great. After having alienated the socially moderate, fiscally liberal wing (Sanders), they'd proceed to alienate the socially liberal, fiscally moderate wing (Clinton), leaving them without any base. This kills the party. Consider that in ten years or so the Democrats'll have it all locked down. TX was just a few percent from going blue, and as the saying goes, once you go blue, you never go back. They wouldn't want to risk this just to get a president in when they know they can just wait a few years and have the whole thing locked down. Also consider that Trump isn't doing much to prevent this, or really anything that risks their electoral prospects in the long term (e.g. the citizenship question on the census, deportations). And with the coming recession, they've got him right where they want him. With Trump in the White House, it's trivial to blame the recession on his trade war and then pull a repeat of 2008. It follows, then, that their pick for President will not really factor in electability, since they'd rather outright throw this one and make their move in 2024, when they've got a perfect storm. This rules out Biden, unless their decision-makers have an extraordinarily high time preference. The opposite of Biden, a candidate who'd be quite unelectable but would do a very good job of bringing young people back into the fold, is of course Sanders. With him, they can have their demographic cake and eat it too. Someone prone to wild speculation could even theorize that they cut such a deal with him back in 2016 so that he wouldn't stir up much of a ruckus when conceding, which a lot of people found quite odd. So I'd guess they'll go with Sanders.

The flip side, of course, is if the decision-makers would prefer rushing to force their loyalist through. And I'm not sure the Zeitgeist is there. There was a lot of internal hype over Clinton back in 2016 because she was the obvious successor to Obama, and there doesn't seem to be anything similar for Harris. On the other hand, it's possible they'd want to double down and go with the candidate most dissimilar to Trump they can find. Which Sanders obviously isn't: just look at the large numbers of people who are bullish on Sanders and Trump but bearish on Romney and Clinton.

| sureanem |

Posted on 19-07-08, 17:26 in (Mis)adventures on Debian ((old)stable|testing|aghmyballs)

Post: #480 of 717 Since: 01-26-19 Last post: 1544 days Last view: 1542 days |

Posted by BearOso We're not living in the 90s anymore, gramps.